For the past few months I have been working with non-Microsoft technologies and operating systems: Linux, Puppet, Docker (lightweight Linux containers), Apache, Nginx, Node.js and others. So far it has been fun and I’ve learned a lot. This week I saw a lot of news and buzz around OWIN and the Katana project. OWIN seems to be a hot topic, so I decided to give it a try. In this post I will show you how to build an OWIN implementation and use it with the Nginx server.

Note: this is a proof of concept rather than production-ready code.

OWIN is a specification that defines a standard interface between .NET web servers and web applications. Its goal is to provide a simple, decoupled way for web frameworks and web servers to interact. As the specification states, there is no assembly called OWIN.dll or similar. It is just a way to build web applications without a dependency on a particular web server. Concrete implementations can provide an OWIN.dll assembly, though.

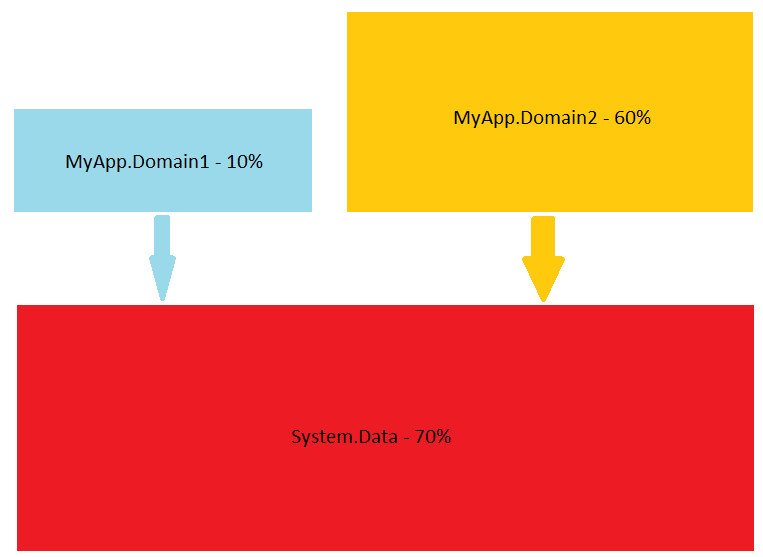

This is in contrast with traditional ASP.NET applications, which depend on the System.Web.dll assembly. If implemented correctly, OWIN eliminates such dependencies. The benefit is that your web application becomes more portable, flexible and lightweight.

Let’s start with the implementation. I modeled my OWIN implementation after the one provided by Microsoft.

    public interface IAppBuilder
    {
        IDictionary<string, object> Properties { get; }
        object Build(Type returnType);
        IAppBuilder New();
        IAppBuilder Use(object middleware, params object[] args);
    }

For the purpose of this post we will implement the Properties property and the Use method. Let’s define our AppFunc application delegate as follows:

    delegate Task AppFunc(IDictionary<string, object> environment);
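To make the delegate more concrete, here is a minimal sketch of an application function with this shape. The `Hello` and `Run` helpers are hypothetical names used only for illustration; the environment keys are the ones used later in this post.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;
using System.Threading.Tasks;

// Same shape as the AppFunc delegate defined above.
public delegate Task AppFunc(IDictionary<string, object> environment);

public static class AppFuncSketch
{
    // Hypothetical application function: writes a plain-text greeting
    // to the stream stored under the "owin.ResponseBody" key.
    public static Task Hello(IDictionary<string, object> environment)
    {
        var body = (Stream)environment["owin.ResponseBody"];
        var bytes = Encoding.UTF8.GetBytes("Hello from " + environment["owin.RequestPath"]);
        environment["owin.ResponseStatusCode"] = 200;
        return body.WriteAsync(bytes, 0, bytes.Length);
    }

    // Builds a minimal environment, runs the delegate and returns the response text.
    public static string Run(AppFunc app, string path)
    {
        using (var ms = new MemoryStream())
        {
            var env = new Dictionary<string, object>
            {
                { "owin.RequestPath", path },
                { "owin.ResponseBody", ms }
            };
            app(env).Wait();
            return Encoding.UTF8.GetString(ms.ToArray());
        }
    }

    public static void Main()
    {
        Console.WriteLine(Run(Hello, "/demo"));
    }
}
```

Because the application only sees a dictionary, it can be exercised like this without any web server at all.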

The examples from the Katana project provide the following code template for the main function:

    static void Main(string[] args)
    {
        using (WebApplication.Start<Startup>("http://localhost:5000/"))
        {
            Console.WriteLine("Started");
            Console.ReadKey();
            Console.WriteLine("Stopping");
        }
    }

I like it very much, so I decided to provide a WebApplication class with a single Start method:

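    public static class WebApplication
    {
        // TStartup is the class whose Configuration method sets up the application.
        // Note: url is not passed on to WebServer here; in this proof of concept
        // the listening port is set in nginx.conf.
        public static IDisposable Start<TStartup>(string url)
        {
            return new WebServer(typeof(TStartup));
        }
    }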

We will provide the WebServer implementation later. Let’s see what the Startup class looks like:

    public class Startup
    {
        public void Configuration(IAppBuilder app)
        {
            var myModule = new LightweightModule();
            app.Use(myModule);
        }
    }

Let’s summarize. We have a console application whose Main method calls the Start method and passes two parameters: the Startup type and a URL. The Start method starts a web server that listens for requests on the specified URL, and the server uses the Startup class to configure the web application. We don’t have any dependency on the System.Web.dll assembly. We have a nice and simple decoupling of the web server and the web application.

So far, so good. Let’s see how the web server configures the web application. In our OWIN implementation we will use reflection to inspect the TStartup type and try to find the Configuration method using a naming convention and a predefined method signature. The Configuration method instantiates a LightweightModule object and passes it to the web server. The web server inspects the object’s type and tries to find an Invoke method compatible with the AppFunc signature. Once the Invoke method is found, it will be called for every web request. Here is the actual Use method implementation:

    public IAppBuilder Use(object middleware, params object[] args)
    {
        var type = middleware.GetType();
        var flags = BindingFlags.Instance | BindingFlags.Public;
        var methods = type.GetMethods(flags);

        // TODO: call method "void Initialize(AppFunc next, ...)" with "args"

        var q = from m in methods
                where m.Name == "Invoke"
                let p = m.GetParameters()
                where (p.Length == 1)
                      && (p[0].ParameterType == typeof(IDictionary<string, object>))
                      && (m.ReturnType == typeof(Task))
                select m;

        var candidate = q.FirstOrDefault();

        if (candidate != null)
        {
            var appFunc = Delegate.CreateDelegate(typeof(AppFunc), middleware, candidate) as AppFunc;
            this.registeredMiddlewareObjects.Add(appFunc);
        }

        return this;
    }
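The lookup of the Configuration method on the startup type is described above but not listed in this post. A minimal sketch of how it might be done follows. The `StartupLoader`, `SketchAppBuilder` and `SampleStartup` names are hypothetical, and the `IAppBuilder` interface is reduced to the one member the sketch needs; the code in the downloadable demo may differ.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Reduced stand-in for the IAppBuilder interface shown earlier
// (assumption: only the member this sketch needs).
public interface IAppBuilder
{
    IDictionary<string, object> Properties { get; }
}

public class SketchAppBuilder : IAppBuilder
{
    private readonly IDictionary<string, object> properties =
        new Dictionary<string, object>();

    public IDictionary<string, object> Properties
    {
        get { return this.properties; }
    }
}

// Hypothetical startup class that follows the naming convention.
public class SampleStartup
{
    public void Configuration(IAppBuilder app)
    {
        app.Properties["configured"] = true;
    }
}

public static class StartupLoader
{
    // Finds "void Configuration(IAppBuilder)" by convention and invokes it
    // on a new instance of the startup type.
    public static void Configure(Type startupType, IAppBuilder app)
    {
        var method = startupType.GetMethod(
            "Configuration",
            BindingFlags.Instance | BindingFlags.Public,
            null,
            new[] { typeof(IAppBuilder) },
            null);

        if (method == null || method.ReturnType != typeof(void))
        {
            throw new InvalidOperationException(
                startupType.Name + " has no 'void Configuration(IAppBuilder)' method.");
        }

        var startup = Activator.CreateInstance(startupType);
        method.Invoke(startup, new object[] { app });
    }

    public static void Main()
    {
        var app = new SketchAppBuilder();
        Configure(typeof(SampleStartup), app);
        Console.WriteLine(app.Properties.ContainsKey("configured"));
    }
}
```

Failing loudly when the method is missing is a design choice made for this sketch; it makes a misnamed Configuration method easier to spot than silently starting an empty application.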

Finally we come to the WebServer implementation. This is where Nginx comes in. For the purpose of this post we will assume that the Nginx server is already started and configured. You can easily extend this code to start Nginx via the System.Diagnostics.Process class. I built and tested this example with Nginx version 1.4.2. Let’s see how to configure the Nginx server. Open the nginx.conf file and find the following settings:

    server {
        listen 80;
        server_name localhost;

and change the port to 5000 (this is the port we use in the example). A few lines below you should see the following settings:

    location / {
        root html;
        index index.html index.htm;
    }

You should modify it as follows:

    location / {
        root html;
        index index.html index.htm;
        fastcgi_index Default.aspx;
        fastcgi_pass 127.0.0.1:9000;
        include fastcgi_params;
    }

That’s all. In short, we configured Nginx to listen on port 5000 and configured fastcgi settings. With these settings Nginx will pass every request to a FastCGI server at 127.0.0.1:9000 using FastCGI protocol. FastCGI is a protocol for interfacing programs with a web server.
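This post assumes Nginx is already running. If you would rather launch it from the console application, a sketch along the following lines might work. The `NginxLauncher` class, the install directory and the executable name are all assumptions that depend on your setup, and stopping Nginx again is not handled here.

```csharp
using System;
using System.Diagnostics;
using System.IO;

public static class NginxLauncher
{
    // Describes how to launch Nginx from its own directory, so that relative
    // paths in nginx.conf (such as "root html") resolve as expected.
    public static ProcessStartInfo BuildStartInfo(string nginxDirectory, string executableName)
    {
        return new ProcessStartInfo
        {
            FileName = Path.Combine(nginxDirectory, executableName),
            WorkingDirectory = nginxDirectory,
            UseShellExecute = false,
            CreateNoWindow = true
        };
    }

    public static Process Start(string nginxDirectory, string executableName)
    {
        return Process.Start(BuildStartInfo(nginxDirectory, executableName));
    }

    public static void Main(string[] args)
    {
        if (args.Length < 2)
        {
            Console.WriteLine("usage: <nginx directory> <executable name>");
            return;
        }

        using (var nginx = Start(args[0], args[1]))
        {
            Console.WriteLine("Started Nginx, process id " + nginx.Id);
        }
    }
}
```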

So, now we need a FastCGI server. Implementing a FastCGI server is not hard, but for the sake of this post we will use the SharpCGI library in the WebServer implementation. First, we have to start listening on port 9000:

    private void Start()
    {
        var config = new Options();
        config.Bind = BindMode.CreateSocket;
        var addr = IPAddress.Parse("127.0.0.1");
        config.EndPoint = new IPEndPoint(addr, 9000);
        config.OnError = Console.WriteLine;
        Server.Start(this.HandleRequest, config);
    }

The code is straightforward, and the only piece we haven’t looked at is the HandleRequest method. This is where web requests are processed:

    private void HandleRequest(Request req, Response res)
    {
        var outputBuff = new byte[1000];

        // TODO: use middleware chaining instead of a loop
        foreach (var appFunc in this.appBuilder.RegisteredMiddlewareObjects)
        {
            using (var ms = new MemoryStream(outputBuff))
            {
                this.appBuilder.Properties["owin.RequestPath"] = req.ScriptName.Value;
                this.appBuilder.Properties["owin.RequestQueryString"] = req.QueryString.Value;
                this.appBuilder.Properties["owin.ResponseBody"] = ms;
                this.appBuilder.Properties["owin.ResponseStatusCode"] = 0;

                var task = appFunc(this.appBuilder.Properties);

                // TODO: don't task.Wait() and use res.AsyncPut(outputBuff);
                task.Wait();

                // Note: this sends the whole 1000-byte buffer, including any
                // bytes the application did not write.
                res.Put(outputBuff);
            }
        }
    }

This was the last piece of our OWIN implementation. This is where we call the web application’s method via the AppFunc delegate.
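The TODO about middleware chaining in HandleRequest could be approached roughly as follows. This is only a sketch under an assumption that is not part of the code above: each middleware is represented as a function that takes the next AppFunc and returns a new AppFunc wrapping it. The `PipelineSketch` class, the `Trace` helper and the `sketch.Trace` key are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public delegate Task AppFunc(IDictionary<string, object> environment);

public static class PipelineSketch
{
    // Composes middleware factories into a single AppFunc. Each factory
    // receives the next AppFunc and returns a new one that wraps it.
    public static AppFunc Build(IList<Func<AppFunc, AppFunc>> middleware)
    {
        // Terminal component: does nothing and completes immediately.
        AppFunc app = env => Task.FromResult(0);

        // Wrap from last to first so the first registered middleware runs first.
        for (var i = middleware.Count - 1; i >= 0; i--)
        {
            app = middleware[i](app);
        }

        return app;
    }

    // Hypothetical middleware: records its name, then calls the next component.
    public static Func<AppFunc, AppFunc> Trace(string name)
    {
        return next => env =>
        {
            ((List<string>)env["sketch.Trace"]).Add(name);
            return next(env);
        };
    }

    // Runs a two-stage pipeline and returns the order in which the stages ran.
    public static List<string> RunDemo()
    {
        var trace = new List<string>();
        var env = new Dictionary<string, object> { { "sketch.Trace", trace } };
        var app = Build(new List<Func<AppFunc, AppFunc>> { Trace("first"), Trace("second") });
        app(env).Wait();
        return trace;
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(" -> ", RunDemo())); // prints "first -> second"
    }
}
```

With a pipeline like this, the server would call one composed AppFunc per request, and each middleware decides whether and when to pass control to the next one.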

In closing, I think OWIN helps developers build better web applications. Please note that my implementation is neither complete nor production-ready. There is a lot of room for improvement. You can find the source code here:

OWINDemo.zip
