Bradley Braithwaite wrote an excellent blog post about the top 5 TDD mistakes. Check it out.

Preventing Stack Corruption

I recently investigated a stack corruption issue related to P/Invoke. In this post I am going to share my experience and show you a simple yet effective approach to avoiding similar problems.

The Bug

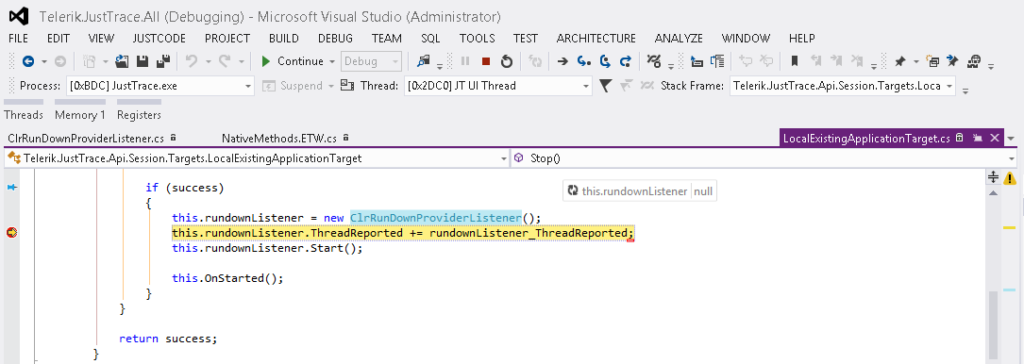

A colleague of mine discovered the bug while debugging a piece of JustTrace code that deals with ETW. The issue was quite tricky because the bug manifested itself, by crashing the program, only in the debug build. Considering that JustTrace requires administrator privileges, I can only guess what the consequences of this bug could be in the release build. Take a look at the code fragment shown in the following screenshot.

The code is single-threaded and looks quite straightforward. It instantiates an object and then uses it. The constructor executes without any exceptions. Still, when the next line executes, the CLR throws an exception with the following message:

Attempted to read or write protected memory. This is often an indication that other memory is corrupt.

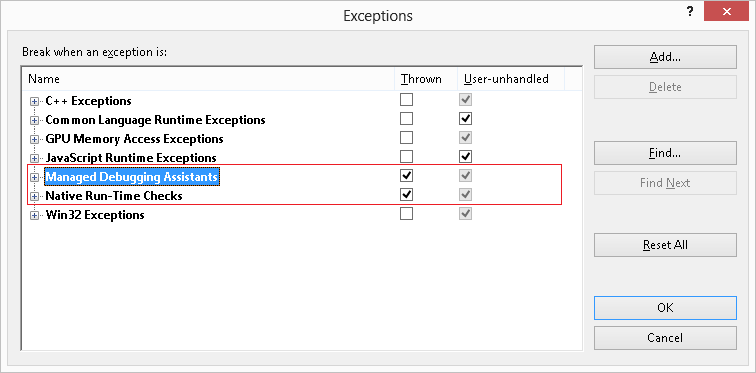

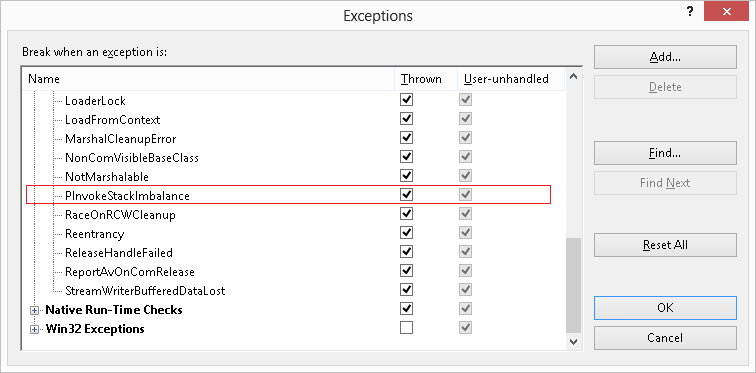

Solution 1: Managed Debugging Assistants

I am usually pessimistic when it comes to MDAs, but I decided to give them a try. First, I tried MDAs from within Visual Studio 2012.

It didn’t work. Then I tried MDAs from within WinDbg. Still no luck. In general, my experience with MDAs is not positive. They are very limited and work only for simple scenarios (e.g. an incorrect calling convention).

Solution 2: Using Disassembly Window

This approach does work. If you are familiar with assembly language, this is the easiest way to find the bug. In my case I was lucky: I had to step through only a few hundred lines. The cause was an incorrect TRACE_GUID_REGISTRATION definition.

    internal struct TRACE_GUID_REGISTRATION
    {
        private IntPtr guid;

        // helper methods
    }

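This structure was passed to the RegisterTraceGuids function as an in/out parameter, and that is where the stack corruption happened: the function writes a registration handle into the second field of each element, so with a one-field struct the write landed past the end of the local variable on the stack.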

The Fix

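A few things are wrong with the TRACE_GUID_REGISTRATION definition. First, it does not define the “handle” field, even though the native structure contains both a GUID pointer and a registration handle. Second, it is not decorated with the StructLayout attribute. The C# compiler already gives structs sequential layout by default, but declaring it explicitly documents that the layout must match the native one, and this could be crucial. Here is the correct definition: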

    [StructLayout(LayoutKind.Sequential)]
    internal struct TRACE_GUID_REGISTRATION
    {
        private IntPtr guid;
        private IntPtr handle;

        // helper methods
    }

Solution 3: FXCop – Using metadata to prevent the bug

Once I had fixed the code, I started thinking about how to avoid such bugs. I came up with the idea of using FXCop, which is part of Visual Studio 2010 and later. My intention was to decorate my data structure with a custom StructImport attribute like this:

    [StructImport("TRACE_GUID_REGISTRATION", "Advapi32.dll")]
    [StructLayout(LayoutKind.Sequential)]
    internal struct TraceGuidRegistration
    {
        private IntPtr guid;
        private IntPtr handle;

        // helper methods
    }
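To make the idea concrete, here is a minimal sketch of what such an attribute and a lookup of it might look like. The StructImportAttribute class and its NativeName/ModuleName properties are hypothetical (the original definition is not shown), and a real FXCop rule would read the attribute through FXCop's own metadata model rather than System.Reflection.

```csharp
using System;
using System.Reflection;
using System.Runtime.InteropServices;

// Hypothetical StructImport attribute: records the native struct name and the
// module whose symbols are expected to describe it. Not a framework type.
[AttributeUsage(AttributeTargets.Struct, AllowMultiple = false)]
internal sealed class StructImportAttribute : Attribute
{
    public StructImportAttribute(string nativeName, string moduleName)
    {
        NativeName = nativeName;
        ModuleName = moduleName;
    }

    public string NativeName { get; }
    public string ModuleName { get; }
}

#pragma warning disable CS0169 // the fields are only read and written by native code
[StructImport("TRACE_GUID_REGISTRATION", "Advapi32.dll")]
[StructLayout(LayoutKind.Sequential)]
internal struct TraceGuidRegistration
{
    private IntPtr guid;
    private IntPtr handle;
}
#pragma warning restore CS0169

internal static class StructImportLookup
{
    // Native name a checker would look up, or null if the type is not annotated.
    public static string NativeNameOf(Type type) =>
        type.GetCustomAttribute<StructImportAttribute>()?.NativeName;

    // Module the checker would load symbols for, or null if the type is not annotated.
    public static string ModuleNameOf(Type type) =>
        type.GetCustomAttribute<StructImportAttribute>()?.ModuleName;
}
```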

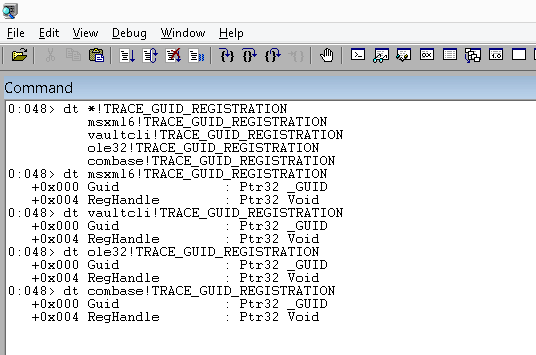

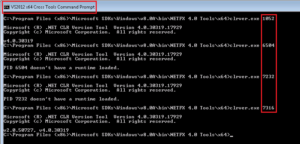

To check whether this was feasible, I started JustTrace under WinDbg and loaded the symbols from the Microsoft Symbol Server. I was surprised to see that there are four modules whose symbols contain TRACE_GUID_REGISTRATION, and none of them was advapi32.

That’s OK. All I need is the information about the TRACE_GUID_REGISTRATION layout. I quickly built a small prototype based on the DIA2Dump sample from the DIA SDK (you can find it under the <PROGRAM_FILES>\Microsoft Visual Studio 10.0\DIA SDK\Samples\DIA2Dump folder). I embedded the code into a custom FXCop rule and tested it. Everything worked as expected.
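The core of such a rule is a comparison between the managed layout and the native one. The sketch below assumes the native field names, offsets, and total size have already been extracted (for example with DIA from the PDB); the NativeField and LayoutComparer types are hypothetical, and Marshal.OffsetOf/Marshal.SizeOf stand in for whatever the real rule computed.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using System.Runtime.InteropServices;

// Hypothetical description of one native field, e.g. as dumped from a PDB with DIA.
internal sealed class NativeField
{
    public NativeField(string name, int offset) { Name = name; Offset = offset; }
    public string Name { get; }
    public int Offset { get; }
}

internal static class LayoutComparer
{
    // Lists the differences between a managed struct's marshaled layout and a native layout.
    public static List<string> Compare(Type managed, IReadOnlyList<NativeField> native, int nativeSize)
    {
        var problems = new List<string>();

        int managedSize = Marshal.SizeOf(managed);
        if (managedSize != nativeSize)
            problems.Add($"size: managed {managedSize}, native {nativeSize}");

        // Order managed fields by marshaled offset so they line up with the native ones.
        var fields = managed
            .GetFields(BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic)
            .OrderBy(f => Marshal.OffsetOf(managed, f.Name).ToInt64())
            .ToList();

        if (fields.Count != native.Count)
            problems.Add($"field count: managed {fields.Count}, native {native.Count}");

        for (int i = 0; i < Math.Min(fields.Count, native.Count); i++)
        {
            long offset = Marshal.OffsetOf(managed, fields[i].Name).ToInt64();
            if (offset != native[i].Offset)
                problems.Add($"{fields[i].Name} at {offset}, native {native[i].Name} at {native[i].Offset}");
        }
        return problems;
    }
}

#pragma warning disable CS0169 // the fields are only touched by native code
internal struct BrokenRegistration { private IntPtr guid; }
internal struct FixedRegistration { private IntPtr guid; private IntPtr handle; }
#pragma warning restore CS0169
```

For TRACE_GUID_REGISTRATION the native layout is two pointer-sized fields (Guid at offset 0, RegHandle at IntPtr.Size), so the one-field definition reports both a size mismatch and a field-count mismatch.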

After a short break, I realized that I could take another approach, so I started refactoring my code.

Solution 4: FXCop – Using convention to prevent the bug

The previous solution works just fine. You apply the attribute to the data structures and the FXCop rule validates that everything is OK. One of the benefits is that you can name your data structures as you wish; for example, TraceGuidRegistration instead of TRACE_GUID_REGISTRATION. However, the two names are practically the same. Also, as I said, I was surprised that TRACE_GUID_REGISTRATION is not defined in the advapi32 module. As a matter of fact, I don’t care where it is defined.

So I decided to do the mapping in a slightly different way. Instead of applying the StructImport attribute, I inspect the signatures of all methods decorated with the DllImport attribute. For example, I can inspect the following method signature:

    [DllImport("AdvApi32", CharSet = CharSet.Auto, SetLastError = true)]
    static extern int RegisterTraceGuids(
        ControlCallback requestAddress,
        IntPtr requestContext,
        ref Guid controlGuid,
        int guidCount,
        ref TraceGuidRegistration traceGuidReg,
        string mofImagePath,
        string mofResourceName,
        out ulong registrationHandle);

I know that the fifth parameter has type TraceGuidRegistration, so I can try to map it. What is nice about this approach is that I can verify both that the TraceGuidRegistration layout is correct and that the StructLayout attribute is applied. These were exactly the two problems with the original definition.
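A convention-based rule starts by collecting the structs that appear in P/Invoke signatures. The sketch below does this with System.Reflection instead of the FXCop API: it finds methods flagged with MethodAttributes.PinvokeImpl, unwraps ref/out parameters, and keeps user-defined value types. The ControlCallback delegate signature and the filtering criteria (skipping primitives, enums, and System types such as Guid) are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using System.Runtime.InteropServices;

#pragma warning disable CS0169 // the fields are only touched by native code
[StructLayout(LayoutKind.Sequential)]
internal struct TraceGuidRegistration
{
    private IntPtr guid;
    private IntPtr handle;
}
#pragma warning restore CS0169

// Hypothetical callback delegate, only needed so the declaration compiles.
internal delegate uint ControlCallback(int requestCode, IntPtr requestContext, IntPtr bufferSize, IntPtr buffer);

internal static class NativeMethods
{
    // Declared only; never called, so the DLL is never loaded.
    [DllImport("AdvApi32", CharSet = CharSet.Auto, SetLastError = true)]
    internal static extern int RegisterTraceGuids(
        ControlCallback requestAddress,
        IntPtr requestContext,
        ref Guid controlGuid,
        int guidCount,
        ref TraceGuidRegistration traceGuidReg,
        string mofImagePath,
        string mofResourceName,
        out ulong registrationHandle);
}

internal static class PInvokeStructScanner
{
    // Collects the user-defined value types used by the P/Invoke methods of a type.
    public static IEnumerable<Type> FindMarshaledStructs(Type container)
    {
        const BindingFlags All = BindingFlags.Static | BindingFlags.Public | BindingFlags.NonPublic;
        return container.GetMethods(All)
            .Where(m => (m.Attributes & MethodAttributes.PinvokeImpl) != 0)
            .SelectMany(m => m.GetParameters())
            // ref/out parameters have ByRef types; look at the underlying type.
            .Select(p => p.ParameterType.IsByRef ? p.ParameterType.GetElementType() : p.ParameterType)
            .Where(t => t.IsValueType && !t.IsPrimitive && !t.IsEnum && t.Namespace != "System")
            .Distinct();
    }
}
```

Each struct found this way could then be checked against its native definition, located by name on the Microsoft Symbol Server.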

Conclusion

Once I had refactored my FXCop rule to use convention instead of explicit attribute declarations, I started wondering whether Microsoft could provide such FXCop rules. So far I see no obstacles to doing so. The task is trivial for all well-known data structures provided by Windows; all that is needed is an internet connection to the Microsoft Symbol Server. I guess the StructImport solution could be applied to any custom data structure mappings. I hope that future versions of Visual Studio will provide a solution for this kind of bug.

Education for Everyone

I guess this is one of those topics without a beginning or an end. You’ve probably already noticed that there is a shift in education. The change mainly affects primary and secondary education, college education, and undergraduate university education. In my opinion, the latter is the most affected. Many people perceive it as a decrease in the quality of education. You’ve probably read such news in your local newspaper or in the mainstream media. There are reports and studies, and no one seems to have a clear idea of what to do about it.

I cannot speak about education as a whole. I can, however, share my thoughts about undergraduate university education in IT and computer science. My impressions come mainly from conducting job interviews for my team and from talking with students and interns. My conclusion is that today’s education skips some theoretical foundations in favor of more practical knowledge and skills. For example, I had 24 main classes during my studies, while today a typical IT/CS student has 32. Students just don’t have enough time to focus and dive deep into things. As a result, current students get a faster start as junior software engineers, but they need more time to become proficient.

While some people find current education insufficient, I think it is just different. Its focus has shifted because of the needs of the IT industry. In my opinion, the lack of theoretical foundations can easily be compensated for with today’s free online education. Many universities, including MIT and Stanford, offer free online courses. There is also the OpenCourseWare Consortium. For more advanced topics and research, one can use the Directory of Open Access Journals. There are hundreds of free journals on various topics.

In conclusion, though most existing IT/CS education programs focus more on practical knowledge and skills, there are a lot of free online resources that can compensate for the lack of theoretical foundations. It is up to the students and their will to improve.

CLR Profilers and Windows Store apps

Last month Microsoft published a white paper about profiling Windows Store apps. The paper is very detailed and provides rich information on how to build a CLR profiler for Windows Store apps. I was eager to read it because when we released JustTrace Q3 2012 there was no documentation. After all, I wanted to know whether JustTrace complies with the guidelines Microsoft provided. It turns out it does. Almost.

At the time of writing, the JustTrace profiler uses a few Win32 functions that are not officially supported for Windows Store apps. The only reason for this is Windows XP support. A typical example is CreateEvent, which is not supported for Windows Store apps but is available since Windows XP. Instead, one should use CreateEventEx, which is available since Windows Vista.

One option is to drop support for Windows XP. I am a bit reluctant, though. At the very least, such a decision should be carefully considered and backed by actual data about our customers who use Windows XP. Another option is to accept the burden of developing and maintaining two code bases: one for Windows XP and another for Windows Vista and higher. Whatever we decide, the decision will be thoroughly thought out.

Let’s have a look at the paper. There is one very interesting detail about memory profiling.

The garbage collector and managed heap are not fundamentally different in a Windows Store app as compared to a desktop app. However, there are some subtle differences that profiler authors need to be aware of.

It gets even more interesting:

When doing memory profiling, your Profiler DLL typically creates a separate thread from which to call ForceGC. This is nothing new. But what might be surprising is that the act of doing a garbage collection inside a Windows Store app may transform your thread into a managed thread (for example, a Profiling API ThreadID will be created for that thread)

Very subtle indeed. For a detailed explanation, you can read the paper. Fortunately JustTrace is not affected by this change.

In conclusion, I think the paper is very good. It is mandatory reading for anyone interested in building a CLR profiler for Windows Store apps. I would also encourage you to look at the CLR Profiler implementation.

Profiling Tools and Standardization

Imagine you have the following job. You have to deal with various performance and memory issues in .NET applications. Your clients often ask you “Why is my application slow, and why does it consume so much memory?” and send you trace/dump files produced by profiling tools from different software vendors. Yeah, you guessed it right: your job is a tough one. In order to open the trace/dump files, you must have installed every profiling tool that your clients use. Sometimes you must have different versions of a particular profiling tool installed, a scenario that is rarely supported by software vendors. Add on top of this the price and the different license conditions of each profiling tool, and you will get an idea of why your job is so hard.

I wish I could sing “Those were the days, my friend”, but I don’t think our profiling tools have improved much to this day. The variety of trace/dump file formats is not justified. We need standardization.

Though I am a C++/C# developer, I have a good idea of what is going on in the Java world. There is no such variety of trace/dump file formats there. If you are investigating memory issues, you will probably have to deal with IBM’s portable heap dump (PHD) file format or Sun’s HPROF. There is a good reason for this, though: the file format is provided by the JVM. The same approach is used in Mono. While this approach is far from perfect, it has a very important impact on software vendors. It forces them to build their tools with standardization in mind.

Let me give you a concrete example. I converted a memory dump from the .NET profiler I work on into the HPROF file format and then opened it with a popular Java profiler. As you might guess, the profiler analyzed the converted data successfully. There were some caveats in the conversion process, but it is a nice demonstration that, with the proper level of abstraction, we can build profiling tools for .NET and Java at the same time. If we can do this, why don’t we have standardized trace/dump file formats for .NET?
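To give an idea of what such a conversion involves, here is a minimal sketch that writes the HPROF file header and a single UTF8 string record. It assumes the HPROF binary layout as documented with the JDK (big-endian values, a "JAVA PROFILE 1.0.2" header, and records made of a tag, a timestamp delta, and a body length); the HprofWriter class is hypothetical and is not the converter mentioned above. It uses standard UTF-8, which is only a safe assumption for plain ASCII names.

```csharp
using System;
using System.IO;
using System.Text;

// Minimal, hypothetical HPROF writer: header plus UTF8 string records only.
internal sealed class HprofWriter
{
    private const byte TagUtf8 = 0x01; // HPROF_UTF8 record tag
    private readonly Stream _out;
    private readonly int _idSize;      // identifier size in bytes; 4 or 8 assumed

    public HprofWriter(Stream output, int identifierSize, long millisSinceEpoch)
    {
        _out = output;
        _idSize = identifierSize;
        byte[] magic = Encoding.ASCII.GetBytes("JAVA PROFILE 1.0.2\0"); // null-terminated
        _out.Write(magic, 0, magic.Length);
        WriteU4((uint)identifierSize);
        WriteU4((uint)(millisSinceEpoch >> 32));          // high word of the timestamp
        WriteU4((uint)(millisSinceEpoch & 0xFFFFFFFF));   // low word of the timestamp
    }

    // Writes a string record that later records (class names, field names) can refer to by id.
    public void WriteUtf8(ulong id, string text)
    {
        byte[] body = Encoding.UTF8.GetBytes(text);
        _out.WriteByte(TagUtf8);
        WriteU4(0);                               // microseconds since the header timestamp
        WriteU4((uint)(_idSize + body.Length));   // number of bytes that follow in this record
        WriteId(id);
        _out.Write(body, 0, body.Length);
    }

    private void WriteId(ulong id)
    {
        if (_idSize == 8) { WriteU4((uint)(id >> 32)); WriteU4((uint)id); }
        else WriteU4((uint)id);
    }

    // HPROF stores multi-byte values in big-endian order regardless of the host.
    private void WriteU4(uint value)
    {
        _out.WriteByte((byte)(value >> 24));
        _out.WriteByte((byte)(value >> 16));
        _out.WriteByte((byte)(value >> 8));
        _out.WriteByte((byte)value);
    }
}
```

A real converter would go on to emit class, heap dump, and root records, which is where most of the mapping work between the .NET and Java object models lies.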

In closing, I think all vendors of .NET profiling tools would benefit from such standardization. Competition would be stronger, which would lead to better products on the market. End users would benefit as well.

Rants on Education

In this post I am going to share my thoughts about education and some of the current educational approaches. I was prompted by an article by I. V. Arnold published in the Russian magazine Математическое просвещение (Mathematical Education), 1936, issue 8.

The article was about choosing a winning strategy for the following game (I don’t know the name of the game, so if you know it please drop a comment; I think the game originated in Japan or China). Here is the game: there are two players and two heaps of objects. Let’s call them player A, player B, heap A (containing a>0 objects) and heap B (containing b>0 objects), respectively. The players take turns removing objects from the heaps according to the following rules:

- at least one object should be removed

- a player may remove any number of objects from either heap A or heap B

- a player may remove an equal number of objects from both heap A and heap B

Player A moves first, and the player who takes the last object(s) wins the game. Knowing the numbers a and b, you should decide whether to start the game as player A or to offer the first turn to your opponent.

This game is sometimes given as a problem in mathematical competitions (and occasionally in informatics ones). When I was at school we studied this game in math class. Back then I was taught a geometry-based approach for choosing a winning strategy. I will sketch the solution here without going into much detail, because this is not the purpose of the post.

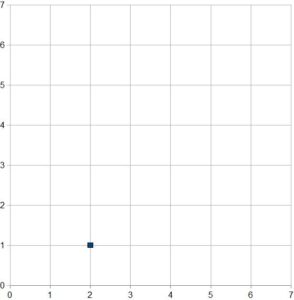

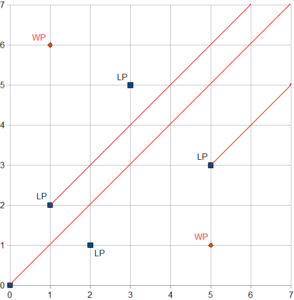

As the game state is defined by the numbers a and b, we can naturally denote it with the ordered pair (a, b) and represent it as a point in a coordinate system. Let’s say a=2 and b=1, so we have the point (2, 1); let’s put a pawn on this point.

According to the rules, we may move the pawn left, down, or diagonally towards the point (0, 0). It’s easy to see that the player who has to move from (2, 1) cannot win, so let’s call (2, 1) a losing position (LP). Here is the list of all possible moves:

- (2, 1) → (2, 0). The next move (2, 0) → (0, 0) wins the game

- (2, 1) → (1, 1). The next move (1, 1) → (0, 0) wins the game

- (2, 1) → (0, 1). The next move (0, 1) → (0, 0) wins the game

- (2, 1) → (1, 0). The next move (1, 0) → (0, 0) wins the game

The point (0, 0) should also be considered a losing position. Notice that if (a, b) is a losing position, then (b, a) is also a losing position. To find the next losing position, we draw all horizontal, vertical, and diagonal lines from all existing losing points and find the first point not lying on any of those lines.

Using this approach we see that (5, 3) is a losing position, while (5, 1) is a winning position (WP). The strategy is now obvious: if the starting position is an LP, we should offer the first turn to our opponent; otherwise, we should start the game and move the pawn to a losing position.
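The line-drawing method above is equivalent to classifying positions by backward induction: a point is losing exactly when no single move (left, down, or diagonal) reaches another losing point. Here is a minimal sketch of that idea with the table computed bottom-up; the class and method names are illustrative. (In the literature this game is usually called Wythoff's game.)

```csharp
using System;

internal static class WythoffGame
{
    // losing[a, b] is true when the player to move from (a, b) loses with best play.
    public static bool[,] LosingTable(int max)
    {
        var losing = new bool[max + 1, max + 1];
        for (int a = 0; a <= max; a++)
        {
            for (int b = 0; b <= max; b++)
            {
                // (a, b) lies on a line from an earlier losing point iff a move reaches it.
                bool reachesLosing = false;
                for (int k = 1; k <= a && !reachesLosing; k++)
                    reachesLosing = losing[a - k, b];          // take k from heap A (move left)
                for (int k = 1; k <= b && !reachesLosing; k++)
                    reachesLosing = losing[a, b - k];          // take k from heap B (move down)
                for (int k = 1; k <= Math.Min(a, b) && !reachesLosing; k++)
                    reachesLosing = losing[a - k, b - k];      // take k from both (diagonal)
                losing[a, b] = !reachesLosing;
            }
        }
        return losing;
    }

    // Returns null for a losing position (offer the first turn); otherwise a move to a losing position.
    public static (int A, int B)? WinningMove(int a, int b)
    {
        bool[,] losing = LosingTable(Math.Max(a, b));
        if (losing[a, b]) return null;
        for (int k = 1; k <= a; k++) if (losing[a - k, b]) return (a - k, b);
        for (int k = 1; k <= b; k++) if (losing[a, b - k]) return (a, b - k);
        for (int k = 1; k <= Math.Min(a, b); k++) if (losing[a - k, b - k]) return (a - k, b - k);
        return null; // unreachable: every winning position has a move to a losing one
    }
}
```

For example, WinningMove(5, 1) returns (2, 1), and WinningMove(5, 3) returns null, meaning we should let the opponent start.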

So I was given an algorithm/recipe for how to play and win the game. Back then my teacher said that the game is related to the Fibonacci numbers, but she did not provide any further information. It wasn’t until I read the article mentioned above that I finally understood the relation between the game and the Fibonacci numbers (and the golden ratio in particular).
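The connection to the golden ratio φ = (1 + √5)/2 can be stated compactly: the k-th losing position, with the smaller heap first, is (⌊kφ⌋, ⌊kφ⌋ + k). This is the classical result for Wythoff's game, not a reconstruction of the article's own argument. The sketch below uses it as a constant-time test; computing φ in double precision is an assumption that only holds for moderately small heaps.

```csharp
using System;

internal static class WythoffFormula
{
    private static readonly double Phi = (1 + Math.Sqrt(5)) / 2;

    // k-th losing position with the smaller heap first: (floor(k*phi), floor(k*phi) + k).
    public static (int A, int B) LosingPosition(int k)
    {
        int a = (int)Math.Floor(k * Phi);
        return (a, a + k);
    }

    // Each difference k = b - a occurs in exactly one losing position,
    // so (a, b) is losing iff its smaller heap equals floor(k*phi).
    public static bool IsLosing(int a, int b)
    {
        if (a > b) (a, b) = (b, a);
        return LosingPosition(b - a).A == a;
    }
}
```

Pairs of consecutive Fibonacci numbers such as (1, 2), (3, 5), (8, 13) and (21, 34) all come out as losing positions, which is where the Fibonacci numbers show up.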

Back to the main topic of this post. Twenty years ago my teacher taught me how to solve a particular math problem. I was given an algorithm/recipe that explains the winning strategy. It is true that I didn’t know exactly why the algorithm works, but it was presented in a very simple and efficient way. In fact, I have remembered it for the last 20 years. This triggered some thoughts about the here-is-the-recipe kind of education.

It seems that the here-is-the-recipe kind of education works pretty well. My experience tells me so. Most of the time I’ve worked in a team, and I had plenty of chances to ask my colleagues about things in their area of expertise. Sometimes I was given an explanatory answer, but most of the time I was given a this-is-the-way-things-are-done kind of answer. And it works. So I believe that the here-is-the-recipe kind of education is the proper one for many domains. Not surprisingly, these domains are well established: people have already acquired the know-how and structured it. Let me provide some examples.

One example is design patterns. Once a problem is recognized as a pattern, a solution is applied. Usually applying the solution does not require much understanding and can be done by almost anyone. Sometimes applying the solution requires further analysis and, if that is not done properly, the result can be messy. The authors of AntiPatterns: Refactoring Software, Architectures, and Projects in Crisis are well aware of this. They talk about the 1980s, when there was a lack of talented architects and the academic community was unable to provide detailed knowledge in problem solving. I think that nowadays the situation is not much different. One thing is for sure: today IT is more dynamic than ever. Big companies rule the market, and they usually release new technologies every 18-24 months. Many small companies play on the scene as well, and they (re)act much faster. Some technologies fail, some succeed. It is much like Darwin’s natural selection. Academic education cannot respond adequately. Fortunately, today’s question-and-answer community web sites fill that gap, especially for the most common problems.

Another example is modern frameworks and components. I’ve seen many people use them successfully without much understanding of the foundations. Actually this is arguable, because one of the features of a good framework/component is that one can use it without knowing how it works. Of course, sometimes it is not that easy. Sometimes using a framework requires a change in the developer’s mindset, and that takes some time. In such cases the question-and-answer community web sites often recommend practices that are not the best (sometimes even bad ones). I guess it all depends on how fast the framework is adopted. If many people embrace the new framework/technology, the best practices are established much faster.

On the other hand, sometimes the here-is-the-recipe kind of education does not work. As far as I can tell, this happens for two major reasons.

The first one is when a technology/framework is replaced by a newer one. That could be because it is too complex, contains design flaws, or whatever. One such example is COM. I remember two books by Don Box: Essential COM (1998) and Essential .NET (2002). The first one opens with “COM as a Better C++” while the second one opens with “The CLR as a Better COM”. Back then times were different, and four years was not a long period. Although COM was introduced in 1993, the know-how about it was not well structured.

The second reason is when the domain is too specific and/or changes too rapidly. In a too-specific domain there is not enough public know-how, and best practices are usually not established at all. In a rapidly changing domain, even if there is know-how, there is not enough time to structure it.

Of course, no teaching/education methodology is perfect. So far I think the existing ones do their job as expected, though there is a lot of room for improvement. Recently I met a schoolmate of mine who is a high school Informatics teacher. We had an interesting discussion on this topic, and I will share it in another post. Stay tuned.

Invention and Innovation

This is an old topic, but I would like to write a post on it. One of the common arguments is that an idea that doesn’t change people’s behavior is only an invention, not an innovation. Here is a quote from Wikipedia:

Innovation differs from invention in that innovation refers to the use of a better and, as a result, novel idea or method, whereas invention refers more directly to the creation of the idea or method itself.

In history you can find many examples of inventions that became innovations only after years or centuries. Sometimes inventions are made too early. Typical examples are the radio and the TV. For other ideas the transformation from invention to innovation takes only a few days. Last but not least, there are innovative ideas as well.

Often the inventor and the innovator are different people. In this post I am going to focus on the spread of information and knowledge between people that is needed to turn an invention into an innovation.

Today there is a clear connection between inventors and innovators. Usually they are both professionals in the same or closely related fields. For example, I am not aware of an innovation made by a journalist that is based on an invention made by a medical doctor. I guess there are examples of such cross-field innovations, but they are not the majority.

Because these flows of information and knowledge are quite narrow, I started wondering how many opportunities for innovation are missed. The naive approach is to use the internet for sharing. There are many web sites that serve as idea incubators/hubs, but I think these sites alone are not enough. Their main problem is that the ideas are currently not well ordered and classified. One possible solution is to use the wikipedia.org site. The main advantages are:

- a single storage point

- a lot of people who are already contributing

Volunteer computing projects, such as SETI@home, might be of use as well. Automatic text classification is a well-developed area, though it cannot replace human experts yet.

Online games such as Foldit should be considered as well. If there are games that offer both fun and a sense of achievement, they might attract a lot of people to classify ideas and actually generate innovations and other ideas.

In conclusion, I think that with the technologies and communications available today, and a little effort, it is easy to build a healthy environment for generating innovations.

Software Lifespan

Last weekend a friend of mine told me an interesting story. Some software on a build server had stopped working unexpectedly. It turned out that the software in question expired after 10 years! Yep, you read that right. On top of this, it turned out that the company that made the software no longer exists. It took 5 days for the company my friend works for to find a way to contact the author of the software. Fortunately, the solution was easy: they had to uninstall the software, delete a “secret” registry key, and then install the software again.

This story reveals some interesting things:

1) software written in 2002 is still in use today

2) the way we differentiate between trial and non-trial software

We all know the mantra that we should not make assumptions about how long our software will be in use. Out of curiosity, I asked my friend how they came to have a 10-year-old build machine. It turned out it was a Windows XP machine that had been migrated to a virtual server many years ago. Interesting, don’t you think? The hardware lifespan is much shorter than the software lifespan.

For comparison, Windows XP was released in 2001, but it doesn’t expire like the software in question. I can easily imagine how that particular expiration check is implemented. During installation it takes the current date and, if a valid serial key is entered, adds 10 years; otherwise it adds, say, 14 days. The interesting thing is the assumption that the software won’t be used after 10 years. Back then, I would probably have implemented the expiration check in a similar way. At best, I would have added 20 years instead of 10. I remember those days very well, with all the dynamics and expectations after the dot-com bubble. My point is that for small software vendors a 10-year period was equal to eternity. For companies like Microsoft a 10-year period is just another good step (at the time of writing, different sources estimate that Windows XP has between 22% and 35% of the OS market share).
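For illustration, here is a minimal sketch of an expiration check along the lines imagined above. Everything in it (the class name, the 14-day trial, computing the date at install time) is a hypothetical assumption, not the vendor's actual code. The second method shows one way to avoid the 10-year trap: only trial installations get an expiry date.

```csharp
using System;

internal static class LicenseExpiry
{
    // Assumed trial length, as in the example above.
    public static readonly TimeSpan TrialPeriod = TimeSpan.FromDays(14);

    // The approach imagined in the post: every installation gets an expiry date.
    public static DateTime ComputeExpiry(DateTime installDate, bool hasValidSerialKey) =>
        hasValidSerialKey ? installDate.AddYears(10) : installDate + TrialPeriod;

    // Alternative: only trial installations expire; licensed ones get null (no limit).
    public static DateTime? ComputeExpiryTrialOnly(DateTime installDate, bool hasValidSerialKey) =>
        hasValidSerialKey ? (DateTime?)null : installDate + TrialPeriod;

    // A null expiry never expires.
    public static bool IsExpired(DateTime? expiry, DateTime now) =>
        expiry.HasValue && now >= expiry.Value;
}
```

With the first method, a licensed copy installed on 1 June 2002 stops working on 1 June 2012, which is exactly the situation described in the story.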

Why do we need profiling tools?

Every project is defined by its requirements. The requirements can be functional or non-functional. Most of the time developers are focused on functional requirements only. This is how it is now, and it probably won’t change much in the near future. We may say that developers are obsessed with functional requirements. In fact, a few decades ago software engineers thought that the IDE of the future would look something like this:

This is quite different from today’s Visual Studio or Eclipse. The reason is not that it is technically impossible. On the contrary, it is technically possible, and this was one of the reasons for the great enthusiasm of software engineers back then. The reason it didn’t happen is simple: people are not that good at writing specifications. Today no one expects to build large software from a single, huge, monolithic specification. Instead we practice iterative processes, implementing a small part of the specification each time. The specifications evolve during the project, and so on.

While we still struggle with tools for verifying functional requirements, we have made great progress with tools for verifying non-functional requirements. We usually call such tools profilers. Today we have a lot of useful profiling tools. These tools analyze software for performance and memory issues. Using a profiler as part of the development process can be a big cost-saver. Profilers free software engineers from the boring task of watching for performance issues all the time. Instead, developers can stay focused on implementing and verifying the functional requirements.

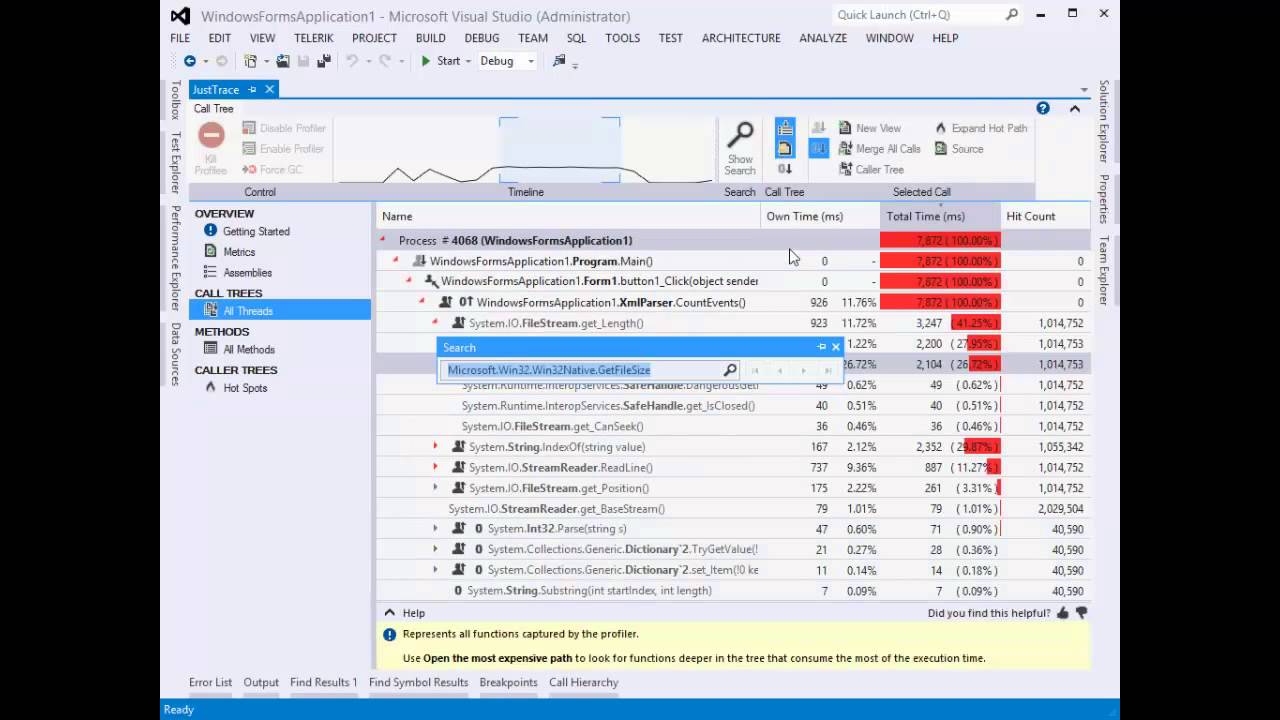

Take for example the following code:

    FileStream fs = …
    using (var reader = new StreamReader(fs, ...))
    {
        while (reader.BaseStream.Position < reader.BaseStream.Length)
        {
            string line = reader.ReadLine();
            // process the line
        }
    }

This is a straightforward and simple piece of code. It has an explicit intention – process a text file reading one line at a time. The performance issue here is that the Length property calls the native function GetFileSize(…) and this is an expensive operation. So, if you are going to read a file with 1,000,000 lines then GetFileSize(…) will be called 1,000,000 times.
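A common alternative is to let ReadLine() signal the end of the input by returning null, so the loop condition no longer queries the file size. There is a second benefit: because StreamReader buffers its input, BaseStream.Position can reach BaseStream.Length while unread lines are still sitting in the reader's buffer, so the loop above may stop early. A minimal sketch (LineProcessing and its methods are illustrative names):

```csharp
using System;
using System.IO;

internal static class LineProcessing
{
    // Reads until ReadLine() returns null: no per-line Length/Position queries,
    // and lines already buffered by the reader are not skipped.
    public static int ProcessLines(TextReader reader, Action<string> process)
    {
        int count = 0;
        string line;
        while ((line = reader.ReadLine()) != null)
        {
            process(line);
            count++;
        }
        return count;
    }

    public static int ProcessFile(string path, Action<string> process)
    {
        using (var reader = new StreamReader(path))
        {
            return ProcessLines(reader, process);
        }
    }
}
```

File.ReadLines(path) offers the same behavior as a lazily evaluated IEnumerable<string>, if you prefer a foreach loop.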

Let’s have a look at another piece of code. This time the following code has quite different runtime behavior.

    string[] lines = …
    int i = 0;
    while (i < lines.Length)
    {
        ...
    }

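In both examples the pattern is the same, and this is exactly what we want: well-known and predictable patterns. The difference lies in the cost of the loop condition: reading an array’s Length is cheap, while FileStream.Length calls GetFileSize(…) each time.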

Take a look at the following 2-minute video to see how easy it is to spot and fix such issues (you can find the sample solution at the end of the post).

After all, this is why we want to use such tools – they work for us. It is much easier to fix performance and memory issues in the implementation/construction phase rather than in the test/validation phase of the project iteration.

Being proactive and using profiling tools during the whole ALM will help you to build better products and have happy customers.

Enumerate managed processes

If you have ever needed to enumerate managed (.NET) processes, you probably found that this is a difficult task. There is no single API that is robust and guarantees correct results. Let’s see what the available options are:

- GetVersionFromProcess. This function has been deprecated in .NET 4

- ICLRMetaHost::EnumerateLoadedRuntimes. The MetaHost API was introduced in .NET 4

- Managed Debugging API

- Performance Counters

- Enumerating loaded Win32 modules (heuristics)

You can take a look at the following examples:

- Listing of Managed Processes

- Enumerating Managed Processes

- How to enumerate the managed processes and AppDomains

Unfortunately, each one of these approaches has a hidden trap: you cannot enumerate 64bit processes from a 32bit process, and you cannot enumerate 32bit processes from a 64bit process. This makes sense, because most of these techniques rely on reading another process’s memory.
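The module-based heuristic from the list above can be sketched with System.Diagnostics.Process, and it runs straight into this trap: for example, a 32bit process reading Process.Modules of a 64bit process gets a Win32Exception. The runtime DLL names checked here (mscorwks.dll for CLR 2.0, clr.dll for CLR 4, coreclr.dll for Silverlight) are assumptions of the sketch, and a process that merely loads one of them is not guaranteed to be running managed code.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Diagnostics;
using System.IO;

internal static class ManagedProcessHeuristic
{
    // Assumed runtime module names; extend the set for other runtimes.
    private static readonly HashSet<string> RuntimeModules =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "mscorwks.dll", "clr.dll", "coreclr.dll" };

    public static bool IsRuntimeModule(string moduleName) =>
        RuntimeModules.Contains(Path.GetFileName(moduleName));

    // true/false when the module list could be read; null when access failed,
    // e.g. a bitness mismatch (Win32Exception) or the process has exited.
    public static bool? LoadsRuntime(Process process)
    {
        try
        {
            foreach (ProcessModule module in process.Modules)
            {
                if (IsRuntimeModule(module.ModuleName)) return true;
            }
            return false;
        }
        catch (Win32Exception) { return null; }
        catch (InvalidOperationException) { return null; }
    }
}
```

Returning null instead of false keeps "could not inspect" distinct from "not managed", which is exactly the information a spawned helper process of the other bitness would be needed for.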

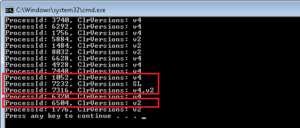

This is a well-known issue. Microsoft has provided a tool called clrver.exe that suffers from the same problem. The following screen shots demonstrate it.

As we can see, the 32bit clrver.exe can enumerate 32bit processes only. If you try it on a 64bit process, you get the error “Failed getting running runtimes, error code 8007012b”. The same is true for the 64bit scenario: the 64bit clrver.exe can enumerate 64bit processes only. If you try it on a 32bit process, you get “PID XXXX doesn’t have a runtime loaded”.

A lot of people have tried to solve this issue. The most common solution on 64bit Windows is to spawn a helper process: if your process is 32bit, you spawn a 64bit process, and vice versa. However, this is a really ugly “solution”. You have to deal with IPC and other things.

However, there is a Microsoft tool that can enumerate both 32bit and 64bit managed processes. This is, of course, Visual Studio. In the Attach to Process dialog you can see the “Type” column, which shows which CLR versions are loaded, if any.

I don’t know how Visual Studio does the trick so I implemented another solution. I am not very happy with the approach that I used, but it seems stable and fast. I also added support for Silverlight (see process 7232).

You can find sample source code at the end of the post.