Every time we build software we have functional requirements. They may not be well defined, but they are good enough to start prototyping, and we refine them as we go. After all, the functional requirements describe what we have to build. Sometimes there are additional requirements that describe how the software system should operate, rather than how it should behave. We call them non-functional requirements. For example, if you build a web site, a non-functional requirement can define the maximum page response time. In this post I am not going to write about the SPE approach, but rather about the more general term of software performance engineering.

Context

Any time a new technology emerges, people want to know how fast it is. We seem obsessed with this question. Once we understand the basic scenarios where a new technology is applicable, we start asking questions about its performance. Unfortunately, talking about performance is not easy because of all the misunderstanding around it.

Let’s set up some context. With any technology we are trying to solve some problems, not all of them. In some sense, any technology is bound to its time. In general, we solve different problems today than we did 10 years ago. This is especially obvious in the IT industry, which changes so fast. Thus, when we talk about a given technology, it is important to understand where it comes from and what problems it tries to solve. Obviously, a technology cannot solve all current problems, and there is no best technology for everything and everybody.

We should understand another important aspect as well. Usually, when a new technology emerges, it keeps some compatibility with an older one. We don’t want people to learn again how to do trivial things. Often this compatibility can have a big impact on performance.

Having set up the context, it should be clear that interpreting performance results is also time-bound. What we considered fast 5 years ago may not be fast any longer. Now, let’s try to describe informally what performance is and how we try to build performant software. Performance is a general term describing various system aspects such as operation completion time, resource utilization, throughput, etc. While it is a quantitative discipline, it does not itself define any criterion for what good or bad performance is. For the sake of this post, this definition is good enough to understand why performance is important in, say, algorithmic trading. In general, there is a clear connection between performance and cost.
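To make the three aspects named above a bit more concrete, here is a minimal sketch of how they could be computed from a log of completed operations. The function name `summarize` and the input shape are made up for illustration, and the utilization figure assumes a single worker that handles one operation at a time and a non-empty log.

```python
def summarize(intervals, window_seconds):
    """Summarize a log of completed operations.

    intervals: list of (start, end) timestamps in seconds, one per operation.
    window_seconds: length of the observation window in seconds.

    Assumption: a single worker, so operations never overlap and busy time
    is simply the sum of the individual durations.
    """
    durations = [end - start for start, end in intervals]
    busy_time = sum(durations)
    return {
        # Operation completion time: average time a single operation took.
        "mean_completion_s": busy_time / len(durations),
        # Throughput: completed operations per second of observation.
        "throughput_per_s": len(intervals) / window_seconds,
        # Resource utilization: fraction of the window the worker was busy.
        "utilization": busy_time / window_seconds,
    }
```

Note that the result is just three numbers. Whether they are good or bad depends entirely on the goals you set for the system.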

IT Industry-Education Gap

Performance issues can rarely be captured in a single value (e.g. CPU utilization), and thus they are difficult to understand. These problems become even more difficult in distributed and parallel systems. Despite the fact that performance is an important and difficult problem, most universities and other educational institutions fail to prepare their students to avoid and efficiently solve performance issues. The IT industry has recognized this fact, and there are companies like Pluralsight, Udacity and Coursera that offer additional training on this topic.

In the rare cases where students are taught how to locate and solve performance issues, they use outdated textbooks from the ’80s. Current education cannot produce well-trained candidates, mostly because the teachers have outdated knowledge. On the other hand, many (online) education companies offer highly specialized performance courses in, say, web development, C++, Java or .NET, which cannot help students understand performance issues in depth.

Sometimes academia tries to help the IT industry by providing tools such as cache-oblivious algorithms or queueing network (QN) models, but abstracting away the real hardware often produces suboptimal solutions.

Engaging students in real-life projects can prepare them much better. It doesn’t matter whether it is an open-source project or a collaboration with the industry. At present, students just don’t have the chance to work on a big project and thus miss the opportunity to learn. Not surprisingly, the best resources on solving performance issues are various blogs and case studies from big companies like Google, Microsoft, Intel and Twitter.

Performance Engineering

Often software engineers have to rewrite code or change the system architecture because of performance problems. To avoid such expensive changes, many software engineers employ various tools and practices. Usually, these practices can be formalized as an iterative process that is part of the development process itself. A common simplified overview of such an iterative process might be as follows:

- identify critical use cases

- select a use case (by priority)

- set performance goals

- build/adjust performance model

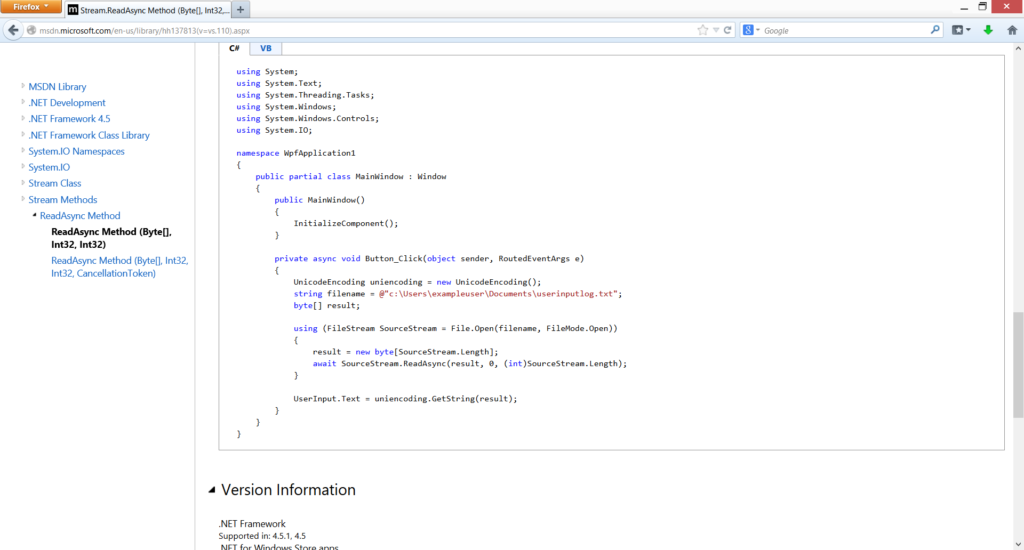

- implement the model

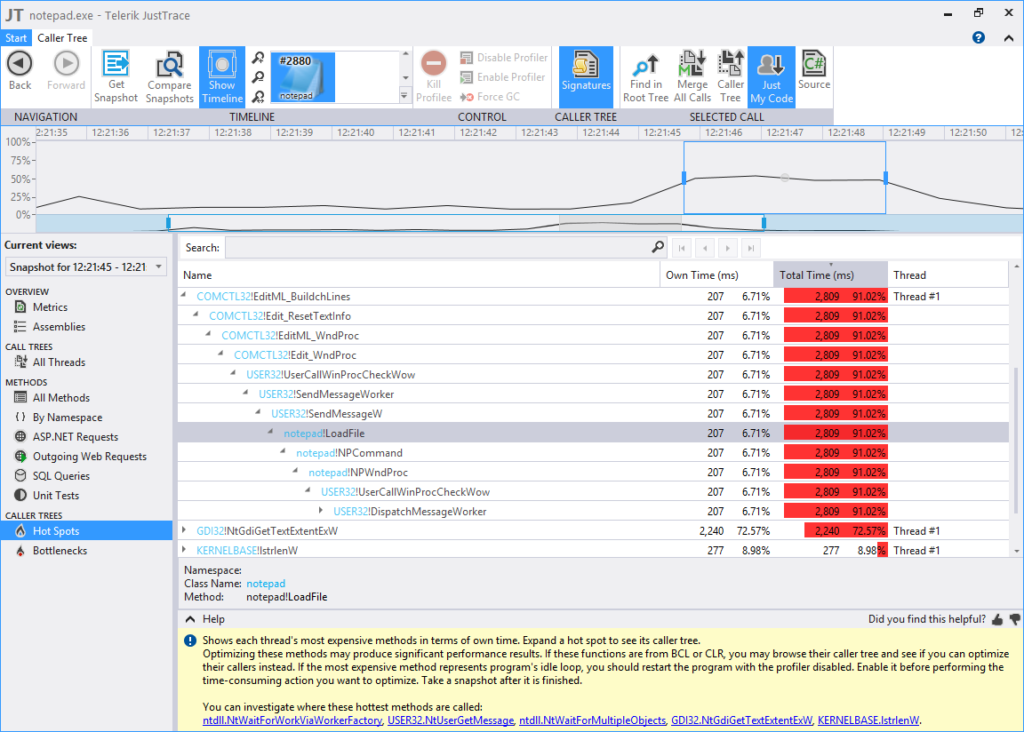

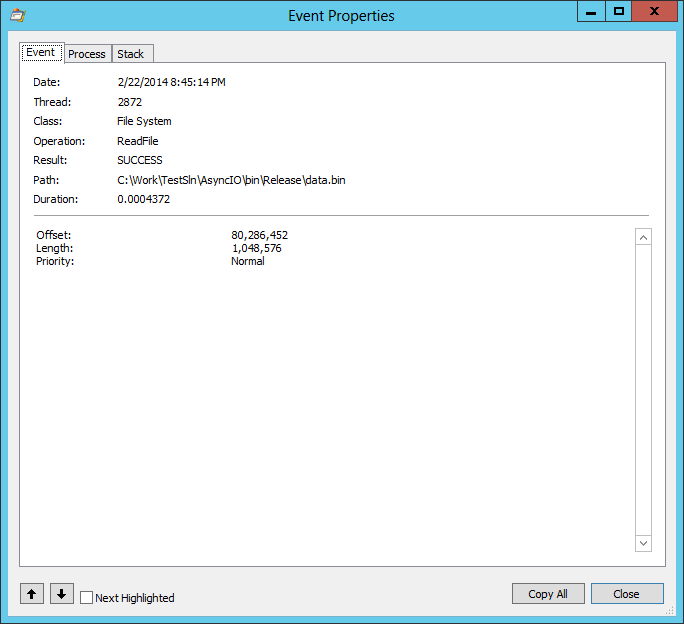

- gather performance data (exercise the system)

- report results

Different performance frameworks/approaches emphasize different stages and/or modelling techniques. However, what they all have in common is that each is an iterative process. We set performance goals and perform quantitative measurements. We repeat the process until we meet the performance goals.

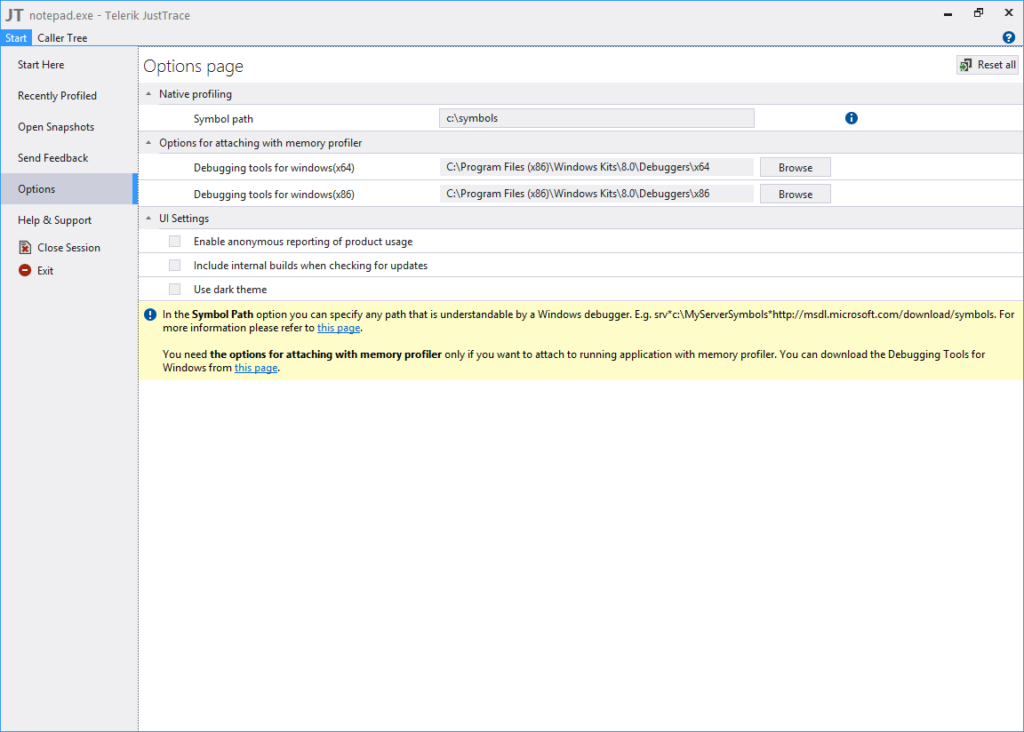
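The "measure, compare against the goal, repeat" loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are made up, the goal is expressed as a 95th-percentile latency, and each "iteration" is simply the next candidate implementation of the same use case.

```python
import math
import time


def measure_latencies(operation, runs=200):
    """Call `operation` `runs` times and return each call's duration in seconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - start)
    return latencies


def percentile(samples, fraction):
    """Nearest-rank percentile of `samples`; `fraction` lies in (0, 1]."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(fraction * len(ordered)))
    return ordered[rank - 1]


def first_candidate_meeting_goal(candidates, goal_seconds, fraction=0.95):
    """Try each (name, operation) pair in order and return the first name
    whose measured percentile latency is within the goal, or None."""
    for name, operation in candidates:
        observed = percentile(measure_latencies(operation), fraction)
        # Report results for this iteration before deciding whether to stop.
        print(f"{name}: p{round(fraction * 100)} = {observed * 1000:.3f} ms")
        if observed <= goal_seconds:
            return name
    return None
```

In practice you would pass a list such as `[("naive", naive_impl), ("cached", cached_impl)]` (both hypothetical) together with the goal for the selected use case. Real measurements also need warm-up runs and a representative workload, which this sketch leaves out.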

Most performance engineering practices rely heavily on tools and automation. Usually, they are part of various CI and testbed builds. This definitely streamlines the process and helps the software engineers. Still, there is a big caveat. Building a good performance model is not an easy task. You must have a very good understanding of the hardware and the whole system setup. The usual way to overcome this problem is to provide small, composable model templates for common tasks so the developer can build larger and more complex models.
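As a rough illustration of what "small, composable model templates" could look like, here is a minimal sketch. The class names `Step`, `Sequential` and `Parallel` and all the cost figures are hypothetical and not taken from any particular framework. The model is deliberately naive: sequential parts add up, and parallel parts cost as much as the slowest one.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Step:
    """A leaf template: one task with an estimated cost in seconds."""
    name: str
    seconds: float

    def estimate(self) -> float:
        return self.seconds


@dataclass(frozen=True)
class Sequential:
    """Parts run one after another, so their estimates add up."""
    parts: Tuple

    def estimate(self) -> float:
        return sum(part.estimate() for part in self.parts)


@dataclass(frozen=True)
class Parallel:
    """Parts run concurrently, so the slowest part dominates.
    Assumption: there is no contention between the parts."""
    parts: Tuple

    def estimate(self) -> float:
        return max(part.estimate() for part in self.parts)


# A larger model built from the small templates (all numbers are made up).
page = Sequential((
    Step("parse request", 0.002),
    Parallel((Step("database query", 0.030), Step("cache lookup", 0.001))),
    Step("render template", 0.010),
))
print(f"estimated page response time: {page.estimate() * 1000:.1f} ms")
```

The estimate from such a model can then be compared with the performance goal before any code is written, and with real measurements afterwards. The hard part remains choosing realistic costs for the leaves, which is exactly where the knowledge of the hardware and system setup comes in.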

In closing, I would say that there is no silver bullet when it comes to solving performance issues. The main reason solving performance issues is difficult is that it requires a lot of knowledge and expertise in both software and hardware. There is a lot of room for improvement in both education and the industry.